Multiple Linear Regression T Test In R

Date: 3.09.2018

Gallery of Images:

Multiple Linear Regression T Test In R

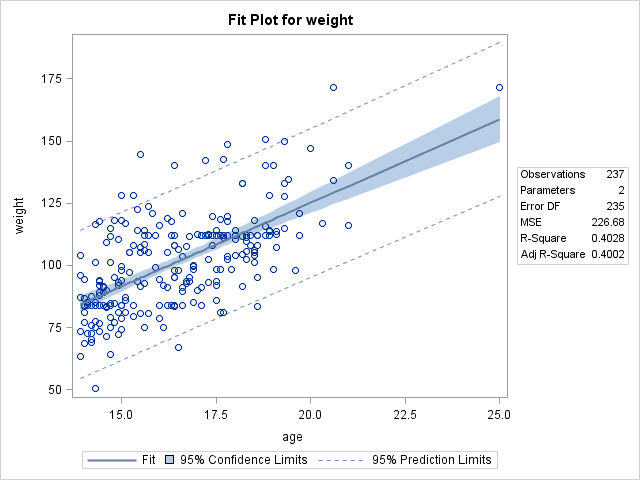

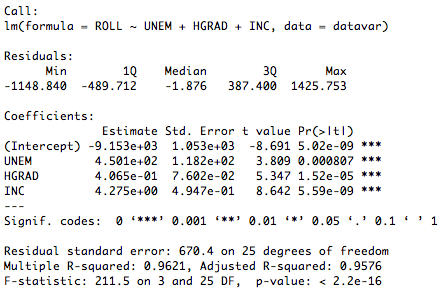

In general, an F-test in regression compares the fits of different linear models. Unlike t-tests, which can assess only one regression coefficient at a time, the F-test can assess multiple coefficients simultaneously.

Similar to simple linear regression, in the call mod1 <- lm(Y ~ X1 + X2 + X3), mod1 is the name of the object that we would like to store the model in, lm stands for linear model and is the R command for running linear regression, Y is our dependent or outcome variable, and X1, X2, and X3 are independent or predictor variables.

coefTest (a MATLAB function rather than an R one) performs an F-test for the hypothesis that all regression coefficients (except for the intercept) are zero versus at least one differs from zero, which essentially is the hypothesis on the model. It returns p, the p-value, F, the F-statistic, and d, the numerator degrees of freedom. The F-statistic and p-value are the same as the ones in the linear regression display and in the anova output for the model.

Hypothesis Test for Regression Slope. This lesson describes how to conduct a hypothesis test to determine whether there is a significant linear relationship between an independent variable X and a dependent variable Y. The test focuses on the slope of the regression line $Y = \beta_0 + \beta_1 X$, where $\beta_0$ is a constant, $\beta_1$ is the slope (also called the regression coefficient), X is the value of the independent variable, and Y is the value of the dependent variable.

The t-test that is used by R does not, by default, assume identical standard deviations in the two categories, although in textbooks this is a common assumption. With the equal-variance option set in the t.test code (var.equal = TRUE), you can recover the same p-values as obtained from an ANOVA implementation.

In a simple linear regression, the F-test is not really interesting since it just duplicates the information given by the t-test, available in the coefficient table. The F-statistic becomes more important once we start using multiple predictors, as in multiple linear regression.

The lack-of-fit test for simple linear regression discussed in Simple Linear Regression Analysis may also be applied to multiple linear regression to check the appropriateness of the fitted response surface and see if a higher-order model is required.
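The relationship between the per-coefficient t-tests and the overall F-test can be sketched in R as follows. This is a minimal illustration, assuming the built-in mtcars data and an arbitrary choice of predictors (wt, hp, disp); neither comes from the original text.

```r
# Illustrative multiple regression on the built-in mtcars data (an assumption).
mod1 <- lm(mpg ~ wt + hp + disp, data = mtcars)
s <- summary(mod1)

# Coefficient table: one row per term, with estimate, standard error,
# t value and two-sided p-value. Each row is a t-test of "coefficient = 0".
coef_table <- coef(s)
print(coef_table)

# Overall F-test: all slopes are zero versus at least one is not.
f <- s$fstatistic  # named vector: value, numdf, dendf
overall_p <- pf(f[["value"]], f[["numdf"]], f[["dendf"]], lower.tail = FALSE)
print(overall_p)

# With a single predictor the F-statistic is just the squared slope t-statistic.
simple <- summary(lm(mpg ~ wt, data = mtcars))
t_slope <- coef(simple)["wt", "t value"]
f_simple <- unname(simple$fstatistic["value"])
print(all.equal(f_simple, t_slope^2))
```

The last comparison is why the F-test adds nothing in simple regression: it carries the same information as the slope's t-test.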
The F statistic (with df = K, N - K - 1) can be used to test the hypothesis that all slope coefficients equal zero.

An excellent review of regression diagnostics is provided in John Fox's aptly named Overview of Regression Diagnostics. Fox's car package provides advanced utilities for regression modeling. The examples assume that we are fitting a multiple linear regression.

Introduction to multiple regression in R. The data set is discussed and exploratory data analysis is performed using a correlation matrix and a scatterplot matrix.

For simple linear regression (i.e., one independent variable), $R^2$ is the same as the correlation coefficient, Pearson's r, squared.

The ANOVA for the multiple linear regression using only HSM, HSS, and HSE is very significant: at least one of the regression coefficients is significantly different from zero.

A linear regression is a statistical model that analyzes the relationship between a response variable (often called y) and one or more variables and their interactions (often called x or explanatory variables). Multiple linear regression is an extension of simple linear regression used to predict an outcome variable (y) on the basis of multiple distinct predictor variables (x). With three predictor variables (x), the prediction of y is expressed by the following equation:

$y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_3$

Note: Don't worry that you're selecting Statistics > Linear models and related > Linear regression on the main menu, or that the dialogue boxes in the steps that follow have the title Linear regression.

In R, multiple linear regression is only a small step away from simple linear regression. In fact, the same lm() function can be used for this technique, but with the addition of one or more predictors.
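Two of the statements above are easy to check numerically. The sketch below assumes the built-in mtcars data; the predictors and the new_car values are hypothetical choices made only for illustration.

```r
# Simple regression: R-squared equals the squared Pearson correlation.
fit1 <- lm(mpg ~ wt, data = mtcars)
r2 <- summary(fit1)$r.squared
r <- cor(mtcars$mpg, mtcars$wt)
print(all.equal(r2, r^2))

# Three predictors: the prediction is b0 + b1*x1 + b2*x2 + b3*x3.
fit3 <- lm(mpg ~ wt + hp + disp, data = mtcars)
b <- coef(fit3)

# Hypothetical new observation (values chosen arbitrarily).
new_car <- data.frame(wt = 3, hp = 110, disp = 200)

by_hand <- b[["(Intercept)"]] +
  b[["wt"]] * new_car$wt +
  b[["hp"]] * new_car$hp +
  b[["disp"]] * new_car$disp

# predict() applies the same equation.
from_predict <- unname(predict(fit3, newdata = new_car))
print(all.equal(by_hand, from_predict))
```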
Linear regression models use the t-test to assess the statistical significance of an independent variable's effect on the dependent variable. Researchers compare the resulting p-value with a maximum threshold, commonly 5 percent and at most 10 percent, with lower values indicating a stronger statistical link.

There are many books on regression and analysis of variance. These books expect different levels of preparedness and place different emphases on the material.

To complete a linear regression using R it is first necessary to understand the syntax for defining models. In one example, the summary output reports Multiple R-squared: 0.9973 and F-statistic: 1486 on 1 and 3 DF, p-value: 3.842e-05. We can use a standard t-test to evaluate the slope and intercept.

Both models are significant (see the F-statistic for the regression), and the Multiple R-squared and Adjusted R-squared are both exceptionally high (keep in mind, this is a simplified example).

Linear regression models assume a linear relationship between the response and predictors. But in some cases, the true relationship between the response and the predictors may be nonlinear. We can accommodate certain nonlinear relationships by transforming variables (e.g., log(x), sqrt(x)) or using polynomial regression.

I fit a multiple regression with fit <- lm(y ~ x1 + x2 + x3, table.b1). Now I want to calculate t statistics in R for testing the individual hypotheses Beta1 = 0, Beta2 = 0, and Beta3 = 0.

Now the linear model is built and we have a formula that we can use to predict the dist value if a corresponding speed is known. Develop the model on the training data and use it to predict the distance on test data. The summary of the model built on the training data reports a residual standard error of 15.84 on 38 degrees of freedom along with the Multiple R-squared.
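The t statistics for the individual hypotheses that each coefficient equals zero can be computed by hand and compared with what summary() reports. This is a sketch under stated assumptions: the table.b1 data from the question is not available here, so the built-in mtcars data and the predictors wt, hp, and disp stand in for it.

```r
# Stand-in model (mtcars replaces the unavailable table.b1 data).
fit <- lm(mpg ~ wt + hp + disp, data = mtcars)

# Estimates and their standard errors from the coefficient covariance matrix.
est <- coef(fit)
se <- sqrt(diag(vcov(fit)))

# t statistic for H0: beta_j = 0 is estimate / standard error.
t_stat <- est / se

# Two-sided p-value from the t distribution with residual degrees of freedom.
p_val <- 2 * pt(abs(t_stat), df = df.residual(fit), lower.tail = FALSE)

by_hand <- cbind(estimate = est, std_error = se, t = t_stat, p = p_val)
reported <- coef(summary(fit))

# The hand computation reproduces the summary() coefficient table.
print(all.equal(unname(by_hand), unname(reported)))
```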
Multiple regression is an extension of simple linear regression. It is used when we want to predict the value of a variable based on the value of two or more other variables.

Methods for multiple correlation of several variables simultaneously are discussed in the Multiple regression chapter. Pearson correlation is a parametric test that assumes the data are linearly related and that the residuals are normally distributed.

F tests and t tests are performed in regression models. In linear model output in R, we get fitted values and expected values of the response variable. Suppose I have height as the explanatory variable and body weight as the response variable for 100 data points.

On this page, learn about multiple regression analysis, including how to set up models and how to extract the coefficients, beta coefficients, and R-squared values. There is a short section on graphing, but see the main graph page for more detailed information.

The appropriateness of the multiple regression model as a whole can be tested by the F-test in the ANOVA table. A significant F indicates a linear relationship between Y and at least one of the X's.

For multiple linear regression with intercept (which includes simple linear regression), $r^2$ is defined as $r^2 = \mathrm{SSM} / \mathrm{SST}$. In either case, $R^2$ indicates the proportion of variation in the y-variable that is due to variation in the x-variables.

The function lm can be used to perform multiple linear regression in R, and much of the syntax is the same as that used for fitting simple linear regression models. The output shows the results of the partial F-test. With a p-value of 0.0647, we cannot reject the null hypothesis ($\beta_3 = \beta_4 = 0$) at the 5% level.

The use and interpretation of $r^2$ (which we'll denote $R^2$ in the context of multiple linear regression) remains the same. However, with multiple linear regression we can also make use of an adjusted $R^2$ value, which is useful for model building purposes.
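A partial F-test and the adjusted $R^2$ can be sketched in R as below. The models are hypothetical: they use the built-in mtcars data, and the choice of which two predictors to drop is arbitrary, so the numbers will not match the 0.0647 quoted above.

```r
# Full model and a reduced model that drops two predictors (illustrative choice).
full <- lm(mpg ~ wt + hp + disp + drat, data = mtcars)
reduced <- lm(mpg ~ wt + hp, data = mtcars)

# anova() on nested models performs the partial F-test of
# H0: the dropped coefficients (disp and drat) are both zero.
cmp <- anova(reduced, full)
print(cmp)

# The same F-statistic from residual sums of squares.
rss_reduced <- sum(resid(reduced)^2)
rss_full <- sum(resid(full)^2)
q <- 2                           # number of coefficients tested
df_full <- df.residual(full)     # residual degrees of freedom of the full model
f_by_hand <- ((rss_reduced - rss_full) / q) / (rss_full / df_full)
print(all.equal(f_by_hand, cmp$F[2]))

# Adjusted R-squared penalises R-squared for the number of predictors p.
n <- nrow(mtcars)
p <- 4
r2 <- summary(full)$r.squared
adj_by_hand <- 1 - (1 - r2) * (n - 1) / (n - p - 1)
print(all.equal(adj_by_hand, summary(full)$adj.r.squared))
```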
We'll explore this measure further in Lesson 10.

Multiple linear regression is the most common form of linear regression analysis. As a predictive analysis, multiple linear regression is used to explain the relationship between one continuous dependent variable and two or more independent variables.

The t tests are used to conduct hypothesis tests on the regression coefficients obtained in simple linear regression. A statistic based on the t distribution is used to test the two-sided hypothesis that the true slope, $\beta_1$, equals some constant value, $\beta_{1,0}$.

If $|t_i| < t_{\alpha/2}$, $i = 1, 2$, the value of the test statistic has fallen in the acceptance region for the null hypothesis.

Another term, multivariate linear regression, refers to cases where y is a vector, i.e., the same as general linear regression. The general linear model considers the situation when the response variable is not a scalar (for each observation) but a vector, $y_i$.

In linear least squares multiple regression with an estimated intercept term, the coefficient of determination $R^2$ is a measure of the global fit of the model. Specifically, $R^2$ is an element of $[0, 1]$.

In R, when I have a (generalized) linear model (lm, glm, gls, glmm, ...), how can I test the coefficient (regression slope) against any value other than 0? In the summary of the model, t-test results for the coefficient are automatically reported, but only for comparison with 0.

Because their p-values are below 0.05, they are both statistically significant in the multiple linear regression model of stackloss. Further detail on the summary function for linear regression models can be found in the R documentation.

These phrases have a standard meaning in statistics that is consistent with most literature you may find on linear regression. In short, the t-statistic is useful for making inferences about the regression coefficients.
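One way to test a slope against a value other than 0 is to shift the estimate by the hypothesised value before dividing by its standard error. The sketch below uses the built-in stackloss data; the null value 0.5 for the Air.Flow coefficient is a hypothetical choice, and the offset() refit is included only as a cross-check, not as the one required method.

```r
fit <- lm(stack.loss ~ Air.Flow + Water.Temp + Acid.Conc., data = stackloss)

# Hypothetical null value for the Air.Flow slope.
beta_null <- 0.5

ct <- coef(summary(fit))
est <- ct["Air.Flow", "Estimate"]
se <- ct["Air.Flow", "Std. Error"]

# t statistic and two-sided p-value for H0: beta_Air.Flow = beta_null.
t_stat <- (est - beta_null) / se
p_val <- 2 * pt(abs(t_stat), df = df.residual(fit), lower.tail = FALSE)
print(c(t = t_stat, p = p_val))

# Cross-check: moving beta_null * Air.Flow into an offset makes the usual
# "coefficient = 0" t-test in summary() equivalent to the test above.
shifted <- lm(stack.loss ~ Air.Flow + Water.Temp + Acid.Conc. +
                offset(beta_null * Air.Flow), data = stackloss)
t_offset <- coef(summary(shifted))["Air.Flow", "t value"]
print(all.equal(t_stat, t_offset))
```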
Multiple linear regression models with two explanatory variables can be given as:

$y_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \varepsilon_i$

Here, the $i$th data point, $y_i$, is determined by the levels of the two continuous explanatory variables $x_{1i}$ and $x_{2i}$ and by the three parameters $\beta_0$, $\beta_1$, and $\beta_2$.

R provides comprehensive support for multiple linear regression. The topics below are provided in order of increasing complexity.

Hypothesis testing in the multiple regression model (Ezequiel Uriel) covers the null and alternative hypotheses, the test statistic, the decision rule, testing hypotheses using the t test (test of a single parameter, confidence intervals), and testing a hypothesis about a single linear combination of the parameters.

Overall test of significance of the regression parameters: we test $H_0: \beta_2 = 0$ and $\beta_3 = 0$ versus $H_a$: at least one of $\beta_2$ and $\beta_3$ does not equal zero.

Assumptions of Linear Regression. Building a linear regression model is only half of the work. In order to actually be usable in practice, the model should conform to the assumptions of linear regression. One assumption, that the predictors are uncorrelated with the residuals, can be checked by doing a correlation test on the X variable and the residuals.

Clear examples for R statistics: multiple regression, multiple correlation, stepwise model selection, and model fit criteria (AIC, AICc, BIC). Consider a histogram of residuals from a linear model: the distribution of these residuals should be approximately normal. The lrtest function in the lmtest package can be used for the likelihood ratio test.

Decide whether there is a significant relationship between the variables in the linear regression model of the data set faithful at a given significance level. Solution: we apply the lm function to a formula that describes the variable eruptions by the variable waiting, and save the linear regression model in a new variable eruption.
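The faithful example and the residual checks mentioned above can be sketched together. The variable name eruption_fit and the 0.05 level are assumptions made for illustration, and hist() is called with plot = FALSE so the sketch runs without a graphics device.

```r
# Regress eruption duration on waiting time (built-in faithful data).
eruption_fit <- lm(eruptions ~ waiting, data = faithful)

# p-value of the slope's t-test; compare it with the chosen level (0.05 assumed).
slope_p <- coef(summary(eruption_fit))["waiting", "Pr(>|t|)"]
print(slope_p < 0.05)

# Residuals of the fitted model.
res <- resid(eruption_fit)

# Correlation test between the predictor and the residuals.
# Least squares makes this sample correlation essentially zero by construction.
ct <- cor.test(faithful$waiting, res)
print(ct$estimate)

# Histogram of residuals (counts only); the shape should look roughly normal.
h <- hist(res, plot = FALSE)
print(h$counts)
```

Because least squares forces the sample correlation between a predictor and the residuals to zero, this check is more informative when applied to a variable that was left out of the model.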
